The recent popularity of artificial intelligence, particularly after the introduction of large language models (LLMs) such as the ones behind ChatGPT, has reignited an age-old debate: is technological progress inherently good or bad? The question has resurfaced with each disruptive wave of innovation, from the printing press to the internet. History is now repeating itself with the recent advances in AI.

I believe one should either enter a debate with an open mind or not enter it at all. In the debate over AI, there are sound arguments on both sides of the fence. In my opinion, however, no new technology is good or bad in itself; it all depends on how we choose to use it. This is true of AI as well. AI is just a tool (at least until it gains sentience), and its impact on society, good or bad, will be determined by how we decide to use and regulate it.

Critics of AI have many reservations; some seem valid, others far-fetched. They often point to the risk that widespread AI-driven automation poses to the job market, which could in turn lead to greater social and economic inequality. Meanwhile, some critics paint a dystopian future in which humanity faces annihilation by its own creations. Regardless of how valid each of these concerns is, society must address them before fully embracing AI.

Another big challenge with AI is accountability. As we shift more and more decision-making to AI, from approving loans to selecting military targets, we must ask: who will be held accountable for the decisions made by an automated system? Should we hold the developers accountable, or the AI itself? The legal and ethical frameworks around AI are still catching up with the rapid pace of technological advancement.

Beyond accountability, AI’s disruption of fields such as law and the arts has raised new questions. How do we define art and creativity when a machine can generate them? What distinguishes AI-generated work from human-created art? And who owns AI-generated content: the developer of the model, the user who provided the input, or the AI itself?

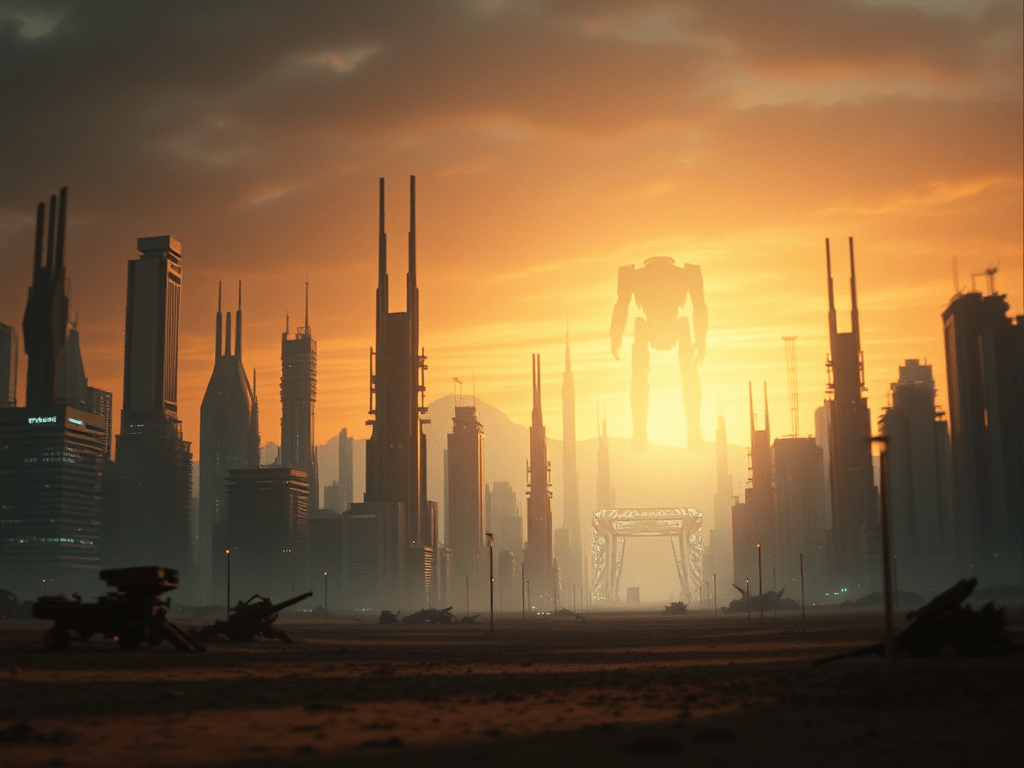

Frank Herbert’s Dune series offers a cautionary lens through which to view our AI-driven future. In the universe of Dune, humanity’s technological overreach led to the Butlerian Jihad, a rebellion in which all thinking machines were destroyed and their use forbidden forever. One of Herbert’s most poignant lines captures this tension:

“Once men turned their thinking over to machines in the hope that this would set them free. But that only permitted other men with machines to enslave them.”

— Frank Herbert, Dune

As we navigate our own era of AI disruption, we must reflect on these cautionary tales. Technology offers immense potential, but without thoughtful regulation and ethical consideration, we may face consequences we are not ready for. Like the people of the Dune universe, we are at a pivotal moment, where the choices we make today could shape the trajectory of humanity for generations to come.
